API

To call the API, choose the example for your preferred programming language.


import requests
import base64

# Use this function to convert an image file from the filesystem to base64
def image_file_to_base64(image_path):
    with open(image_path, 'rb') as f:
        image_data = f.read()
    return base64.b64encode(image_data).decode('utf-8')

# Use this function to fetch an image from a URL and convert it to base64
def image_url_to_base64(image_url):
    response = requests.get(image_url)
    image_data = response.content
    return base64.b64encode(image_data).decode('utf-8')

# Use this function to convert a list of image URLs to base64
def image_urls_to_base64(image_urls):
    return [image_url_to_base64(url) for url in image_urls]

api_key = "YOUR_API_KEY"
url = "https://api.segmind.com/v1/playground-v2.5"

# Request payload
data = {
    "prompt": "(solo), anthro, male, protogen, high detailed fur, smile, hyperdetailed,realistic",
    "negative_prompt": "bad anatomy, bad hands, missing fingers,low quality,blurry",
    "samples": 1,
    "num_inference_steps": 25,
    "guidance_scale": 3,
    "seed": 36446545871,
    "base64": False
}

headers = {'x-api-key': api_key}

response = requests.post(url, json=data, headers=headers)
print(response.content)  # The response is the generated image

Attributes

- prompt: Prompt to render.

- negative_prompt: Prompts to exclude, e.g. 'bad anatomy, bad hands, missing fingers, low quality, blurry'.

- samples: Number of samples to generate (min: 1, max: 4).

- num_inference_steps: Number of denoising steps (min: 20, max: 100).

- guidance_scale: Scale for classifier-free guidance (min: 1, max: 25).

- seed: Seed for image generation (min: -1, max: 999999999999999).

- base64: Base64 encoding of the output image.
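
The documented ranges can be checked on the client before a request is sent. The sketch below is one possible way to do that. It assumes the limits are inclusive and that keys left out of the payload are acceptable, neither of which is stated here. The names LIMITS and check_payload are hypothetical helpers, not part of the API.

```python
# Documented ranges copied from the attribute list.
# Assumption: both ends of each range are inclusive.
LIMITS = {
    "samples": (1, 4),
    "num_inference_steps": (20, 100),
    "guidance_scale": (1, 25),
    "seed": (-1, 999999999999999),
}


def check_payload(data):
    """Return a list of range problems; an empty list means none were found."""
    problems = []
    for name, (low, high) in LIMITS.items():
        if name not in data:
            # Assumption: missing keys are skipped, since server defaults are not documented here.
            continue
        value = data[name]
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"{name} must be a number, got {value!r}")
        elif not low <= value <= high:
            problems.append(f"{name}={value} is outside [{low}, {high}]")
    return problems
```

Calling check_payload(data) on the request payload before requests.post lets you fix an out-of-range value without spending a request on it.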

To keep track of your credit usage, inspect the response headers of each API call. The x-remaining-credits header indicates the number of credits remaining in your account. Monitor this value to avoid disruptions in your API usage.
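
As a minimal sketch, the credit value can be read from the headers of a response object. The helper name remaining_credits is hypothetical, and the assumption that the value parses as a number is not confirmed here, so the function returns None when the value is missing or not numeric.

```python
from typing import Mapping, Optional


def remaining_credits(headers: Mapping[str, str]) -> Optional[float]:
    """Return the x-remaining-credits value as a number, or None if absent or not numeric."""
    raw = headers.get("x-remaining-credits")
    if raw is None:
        return None
    try:
        # Assumption: the header carries a plain numeric string.
        return float(raw)
    except ValueError:
        return None
```

With the requests library this would be called as remaining_credits(response.headers). The headers object in requests is case-insensitive, so the lowercase lookup also matches other capitalizations of the header name.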

Playground V2.5

Playground V2.5 is a diffusion-based text-to-image generative model designed to create highly aesthetic images from textual prompts. As the successor to Playground V2, it is presented as the state of the art in open-source aesthetic quality. It produces visually attractive images through advancements in color, contrast, and human details.

Technical Details

- Model Type: Playground V2.5 operates as a Latent Diffusion Model.

- Text Encoders: It utilizes two fixed, pre-trained text encoders: OpenCLIP-ViT/G and CLIP-ViT/L.

- Architecture: The model follows the same architecture as Stable Diffusion XL.

- Resolution: Playground V2.5 generates images at a base resolution of 1024x1024 pixels and also caters to portrait and landscape aspect ratios.

- Scheduler Options: The default scheduler is EDMDPMSolverMultistepScheduler, which enhances fine details. A guidance scale of 3.0 works well with this scheduler.

Playground V2.5 is reported to outperform SDXL, PixArt-α, DALL-E 3, Midjourney 5.2, and its predecessor, Playground V2, in aesthetic quality.

Other Popular Models

sdxl-img2img

SDXL Img2Img is used for text-guided image-to-image translation. This model uses the weights from Stable Diffusion to generate new images from an input image using StableDiffusionImg2ImgPipeline from diffusers.

sdxl-controlnet

SDXL ControlNet gives fine-grained control over text-to-image generation. SDXL ControlNet models introduce the concept of conditioning inputs, which provide additional information to guide the image generation process.

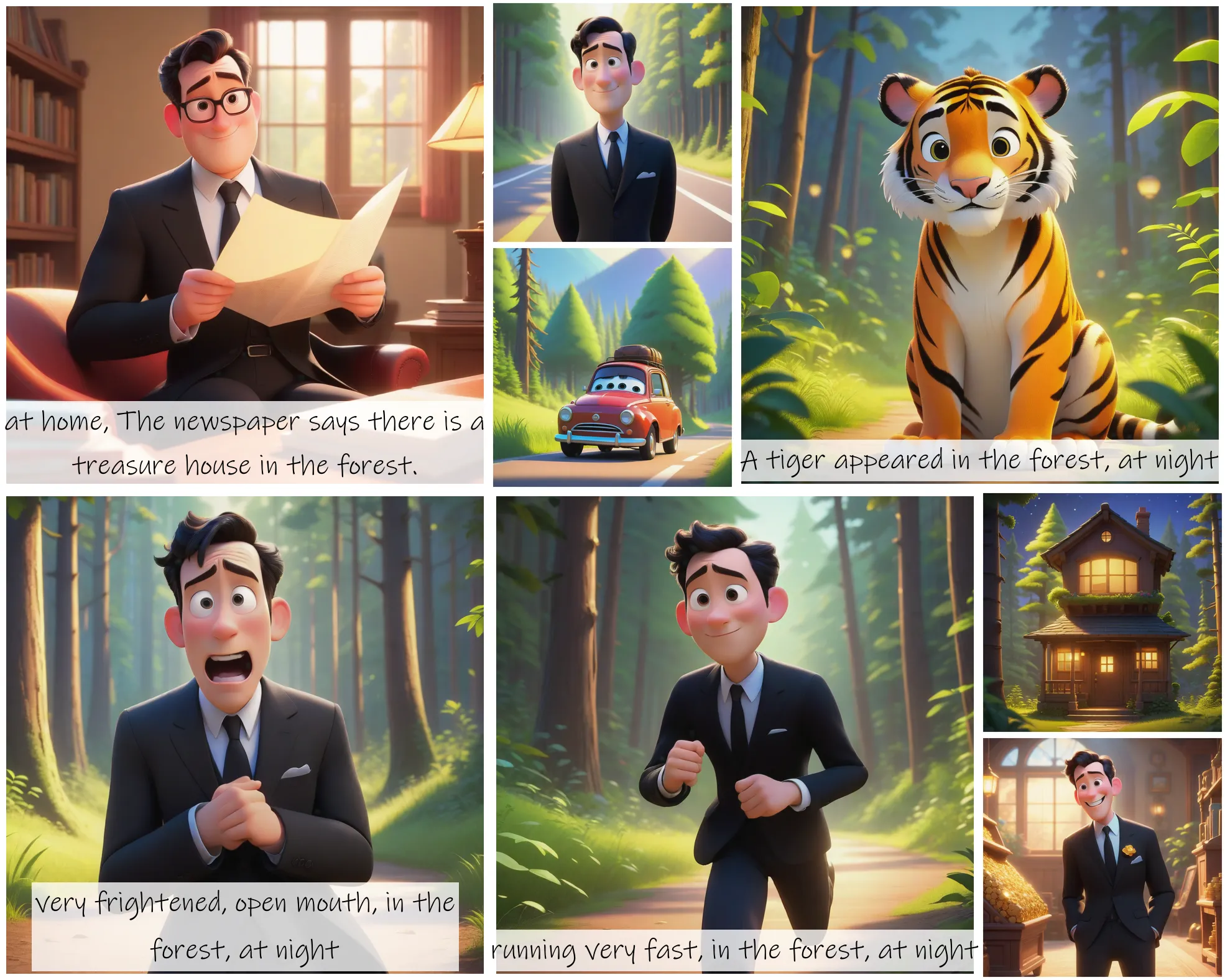

storydiffusion

Story Diffusion turns your written narratives into stunning image sequences.

codeformer

CodeFormer is a robust face restoration algorithm for old photos or AI-generated faces.